Project 1: Towards Robust Deep Learning Systems against Deepfakes in Digital Forensics

Leads: Dr. Chi (FAMU) & Dr. Iyengar (FIU)

Co-Leads: Dr. Meghanathan (JSU), Dr. Reddy (GSU), Col. Miller (FIU)

Introduction

Deep learning (DL) algorithms are a primary workhorse for extracting forensic information from available data. We recently used such algorithms successfully to monitor and improve data quality (DQ) in the Brooks-Iyengar fusion algorithms [10]. The relevant tasks include data integration, data cleaning (error detection and repair, and data curation), entity resolution, and schema matching. Researchers in data curation have shown that deep learning techniques can achieve state-of-the-art performance on data quality metrics.

Preliminary Work

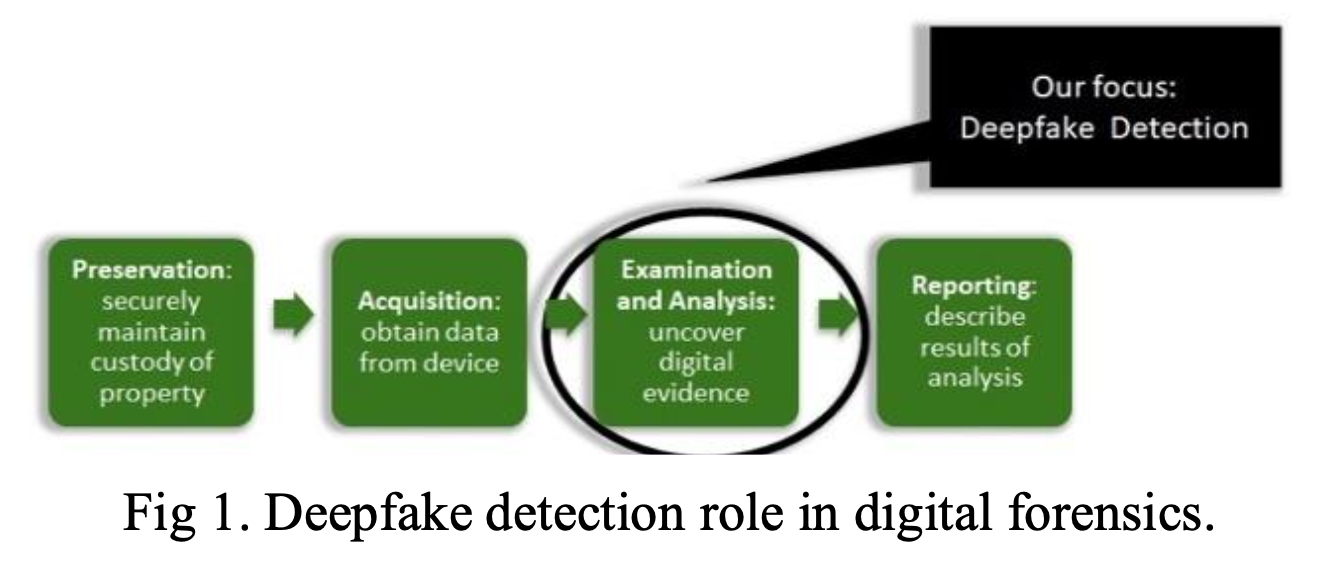

In recent years, attackers have increasingly adopted deep learning to develop new, sophisticated DL-based security attacks or to evade DL-based defense systems [5]. Adversarial attacks [11] are deliberately designed to exploit vulnerabilities in machine learning models, causing them to make mistakes. Deepfakes are media in which a person in an existing image or video is replaced with someone else's likeness using artificial neural networks. Deepfakes disrupt crucial digital evidence in military operational decision-making systems [3, 4].

The goal of this project is to use deep learning technology to develop smart forensic detection techniques to defend against Deepfakes based on evidence. We plan to achieve that goal by building a framework and a proof-of-concept implementation for identifying and recording vulnerabilities in deepfake forensic algorithms, including issues arising from the use of generative adversarial networks (GANs). The proposed project will pursue three complementary objectives: (a) explore how ML [12] is being utilized for deepfakes, including simulation of audio and face swaps; (b) develop a novel stochastic PDE-based framework to detect compromised audio and video; and (c) investigate the fundamental capabilities, challenges, and limitations of ML in detecting deepfakes.

We will take a first-principles approach: instead of treating an ML algorithm as a black box and simply training it to obtain good performance, we will carefully examine representative ML-based algorithms, including their assumptions about the characteristics of the input data and the targeted problems they intend to address. The diversity and complexity of cybersecurity-related forensic problems in military operational decision-making systems are depicted in the figure above.

Research Objectives

The proposed research is divided into four phases.

- Research Task 1 - We will start by exploring how ML [12] is being utilized for Deepfakes. We will then structure and formulate new algorithms for better forensic detection of fake information in the context of critical military operational decision-making systems, with a specific focus on video and audio datasets.

- Research Task 2 - Next, we will construct a Stochastic Neural Network (SNN) framework, described by a system of Stochastic Differential Equations (SDEs), that can be utilized for Deepfakes [3, 4]. As theoretical validation of our approach, we plan to analyze the stability and reliability of the network structure. With the mathematical foundation for the behavior of SDE networks in place, we will develop numerical algorithms to implement the corresponding SNN and use it to reproduce Deepfakes.

- Research Task 3 - In the next step, we will study the confidence distribution generated by the SNN and the sensitivity of training data in determining the authenticity of personal characteristics. The computational effort for the SNN will focus on the design of scalable algorithms, allowing us to take advantage of the stochastic structure of an SDE network. It is important to identify the features that distinguish real videos from forged ones.

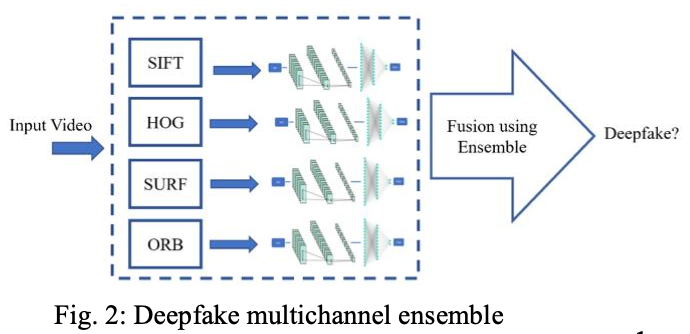

- Research Task 4 - In the final phase, we will apply a multichannel ensemble model (Fig. 4) to detect video anomalies. This model will apply Scale-Invariant Feature Transform (SIFT) [7], Histograms of Oriented Gradients (HOG) [6], Speeded-Up Robust Features (SURF), and Oriented FAST and Rotated BRIEF (ORB) [8, 9] with different CNNs. The model will capture edges and local features, and will handle blurring, noise, and rotation. Individually optimized models will be developed for SIFT, HOG, SURF, and ORB. Some may need a deep CNN, whereas a simple CNN architecture may suffice for others. An ensemble can combine different types of models. Furthermore, as suggested in Fig. 1, features extracted from different models can be concatenated, and various AI models can be applied as well. For fusion, stacking and weighted averaging will be used to select the best feature set for the final decision.
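
The weighted-average fusion step in Research Task 4 can be illustrated with a minimal sketch. It assumes each channel model (SIFT, HOG, SURF, ORB) already outputs a probability that a clip is fake. The function names, the accuracy-based weighting heuristic, and all numeric scores below are hypothetical illustrations rather than part of the proposed system, and stacking is not shown.

```python
def weighted_average_fusion(channel_probs, weights):
    """Fuse per-channel 'fake' probabilities with a normalized weighted average.

    channel_probs: one list of per-sample probabilities per channel.
    weights: one non-negative weight per channel, not all zero.
    """
    if len(channel_probs) != len(weights):
        raise ValueError("expected one weight per channel")
    if any(w < 0 for w in weights) or sum(weights) == 0:
        raise ValueError("weights must be non-negative and not all zero")
    total = sum(weights)
    n_samples = len(channel_probs[0])
    return [
        sum(w * probs[i] for w, probs in zip(weights, channel_probs)) / total
        for i in range(n_samples)
    ]


def accuracy_weights(channel_probs, labels, threshold=0.5):
    """Weight each channel by its validation accuracy (one simple heuristic)."""
    accs = []
    for probs in channel_probs:
        correct = sum((p >= threshold) == bool(y) for p, y in zip(probs, labels))
        accs.append(correct / len(labels))
    total = sum(accs)
    if total == 0:
        # No channel is ever right: fall back to uniform weights.
        return [1.0 / len(accs)] * len(accs)
    return [a / total for a in accs]


# Hypothetical validation scores; rows are the SIFT, HOG, SURF and ORB channels.
val_probs = [
    [0.9, 0.2, 0.8, 0.1],
    [0.7, 0.4, 0.6, 0.6],
    [0.8, 0.1, 0.4, 0.3],
    [0.6, 0.3, 0.7, 0.2],
]
val_labels = [1, 0, 1, 0]  # 1 = fake, 0 = real

weights = accuracy_weights(val_probs, val_labels)
fused = weighted_average_fusion(val_probs, weights)
decisions = [p >= 0.5 for p in fused]
```

In this toy setting the two channels that misclassify one clip each receive lower weights, so the fused score leans on the more reliable channels. In practice the weights would more likely be learned on held-out data than set by this heuristic.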

Relevant contributions to DoD Applications

In addition to a wide range of civilian applications, the problems under consideration also have significant applications in military decision-making and cybersecurity systems. AI applied to perception tasks such as imagery analysis can extract useful information from raw data and equip leaders with increased situational awareness. This is the main motivation of the proposed research. To ensure that DoD AI systems are safe, secure, and robust, our proposed research will focus on lowering the risk of failure for existing and future DoD AI systems through (a) development of SNN systems that consider uncertainties in the training procedure and produce random outputs, (b) implementation and evaluation of the developed SNN, focusing on scalability to take advantage of stochastic computing, and (c) design of an ensemble method for video anomaly detection.
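
To make the idea of an SNN that "produces random outputs" concrete, the following is a minimal sketch under stated assumptions. It treats the hidden state as the solution of an SDE of the form dX = tanh(W X + b) dt + sigma dW, discretizes it with the standard Euler-Maruyama scheme, and repeats the stochastic forward pass to obtain an empirical confidence distribution. The drift function, the sigmoid readout, and every parameter value are hypothetical choices for illustration and are not the proposal's actual model.

```python
import math
import random
import statistics


def euler_maruyama_forward(x0, weights, bias, sigma, n_steps, dt, rng):
    """Integrate dX = tanh(W X + b) dt + sigma dW with the Euler-Maruyama scheme."""
    x = list(x0)
    for _ in range(n_steps):
        drift = [
            math.tanh(sum(w * xj for w, xj in zip(row, x)) + b)
            for row, b in zip(weights, bias)
        ]
        # Each Brownian increment is sqrt(dt) times a standard normal draw.
        x = [
            xi + di * dt + sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
            for xi, di in zip(x, drift)
        ]
    return x


def confidence_samples(x0, weights, bias, readout, sigma,
                       n_steps=20, dt=0.05, n_samples=200, seed=0):
    """Run repeated stochastic forward passes and return sigmoid confidence scores."""
    rng = random.Random(seed)
    scores = []
    for _ in range(n_samples):
        h = euler_maruyama_forward(x0, weights, bias, sigma, n_steps, dt, rng)
        logit = sum(r * hi for r, hi in zip(readout, h))
        scores.append(1.0 / (1.0 + math.exp(-logit)))
    return scores


# Hypothetical two-unit network and input features.
x0 = [0.5, -0.3]
W = [[0.2, -0.4], [0.3, 0.1]]
b = [0.0, 0.1]
readout = [1.0, -1.0]

noisy = confidence_samples(x0, W, b, readout, sigma=0.3)
clean = confidence_samples(x0, W, b, readout, sigma=0.0)

mean_conf = statistics.mean(noisy)
spread = statistics.pstdev(noisy)  # width of the empirical confidence distribution
```

With sigma set to zero the network reduces to a deterministic forward pass and every sample is identical. With sigma greater than zero, the spread of the samples gives one simple measure of how uncertain the network is about a given input.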

Ultimately, this project will yield an ML-based framework for the detection of Deepfake datasets. The framework will be validated using published datasets and a real-world testbed. We also expect to gain insights into strategies for defending against other modes of Deepfakes. We will establish a GitHub page for our project, making all algorithms, code and manuscripts available online.