Project 3: Big Data Digital Forensics

Lead: Dr. Reddy (GSU)

Co-Leads: Dr. Iyengar (FIU), Dr. Meghanathan (JSU)

Introduction

Key aspects of digital forensics are the use of scientific methods and the collection, preservation, validation, identification, analysis, interpretation, and documentation of evidence. A complete fingerprint or face image is rarely available at a crime scene, so investigators in the crime branch must identify related matches in criminal data from collected images or partial image data. We propose to develop algorithms that identify the closest match in fingerprint or image data for a given partial image (fingerprint or other image). In addition to algorithm development, we will use GPU-based programming for real-time matching and machine learning (ML) techniques to automate and expedite the process. Digital forensic models are scientifically derived and have proven useful in the preservation, validation, identification, interpretation, documentation, and presentation of digital evidence. These methods operate on the derived information to facilitate the reconstruction of events arising from criminal actions or anticipated unauthorized actions that disrupt planned operations. Project 3 is designed to preserve evidence in its most original form while performing a structured investigation that collects, identifies, and validates digital information in order to reconstruct past events and minimize threats.

Preliminary Work

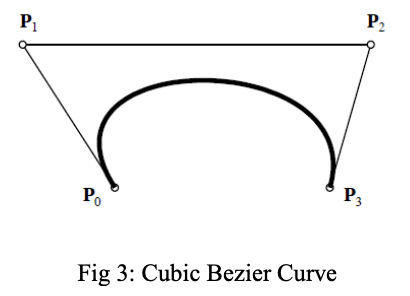

The GSU research group investigates a “GPU-based architecture for latent fingerprint matching.” Latent fingerprints are difficult to analyze because of their poor image quality. A latent fingerprint extracted from a crime scene typically covers only 20 to 30 percent of the original fingerprint. It therefore contains few of the minutiae required for a fingerprint match. We used the third-order (cubic) Bezier curve to mark the positions on the ridges that form “minutiae.” This is a smooth parametric curve used to approximate curved objects such as ridges. These ridge-specific markers carry additional information, and the ridge geometry yields new features that supplement the missing bifurcations and ridge endings. In Fig 3, the curve is defined by four points: two endpoints (P0, P3) and two control points (P1, P2). The control points determine the shape of the curve but generally lie off it, because the Bezier curve does not pass through them. Following the approach used in handwritten character recognition, we proposed an algorithmic tool that uses ridge-specific markers generated from minutiae. Our preliminary experiments indicate that the proposed tool is a novel method for fingerprint recognition using latent prints [22]. The sampling model is then used to create Bezier curves for the latent print. We sample each edge of the latent fingerprint skeleton to form Bezier samples and compare them with the reference print. The comparison measures the similarity between the latent and reference prints. It continues until that similarity reaches a minimum acceptance threshold, and once a match is found, we continue matching for all remaining latent fingerprints. The method is novel and has been tested extensively. As the data grow in size, storage, organization, management, and retrieval quickly become pressing problems. The new method uses GPUs to process such data and respond in near real time.
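The ridge-curve representation above can be illustrated with a minimal Python/NumPy sketch. The cubic Bezier formula is standard. The similarity score (inverse mean point distance), the function names `cubic_bezier`, `curve_similarity`, and `is_match`, and the threshold of 0.8 are hypothetical choices for illustration, not the group's published method. The sketch also assumes that latent and reference curves are already aligned and paired.

```python
import numpy as np

def cubic_bezier(p0, p1, p2, p3, n_samples=20):
    """Sample a cubic Bezier curve at n_samples evenly spaced t in [0, 1].

    Bernstein form: B(t) = (1-t)^3 P0 + 3(1-t)^2 t P1 + 3(1-t) t^2 P2 + t^3 P3.
    P0 and P3 are endpoints; P1 and P2 are control points the curve does not pass through.
    """
    pts = np.array([p0, p1, p2, p3], dtype=float)    # (4, 2) for 2-D points
    t = np.linspace(0.0, 1.0, n_samples)[:, None]      # (n, 1) column of parameters
    basis = np.hstack([(1 - t) ** 3,
                       3 * (1 - t) ** 2 * t,
                       3 * (1 - t) * t ** 2,
                       t ** 3])                         # (n, 4) Bernstein weights
    return basis @ pts                                  # (n, 2) sampled points

def curve_similarity(a, b):
    """Hypothetical score in (0, 1]: 1 / (1 + mean distance between paired samples)."""
    d = np.linalg.norm(a - b, axis=1).mean()
    return 1.0 / (1.0 + d)

def is_match(latent_curves, reference_curves, threshold=0.8):
    """Average the scores of paired curves and compare with the acceptance threshold."""
    scores = [curve_similarity(a, b) for a, b in zip(latent_curves, reference_curves)]
    return float(np.mean(scores)) >= threshold
```

For example, `cubic_bezier((0, 0), (1, 2), (3, 2), (4, 0), n_samples=3)` returns the two endpoints and the midpoint (2.0, 1.5). The midpoint lies below the control points, consistent with the curve not passing through them.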
General-purpose GPU clusters are commonly proposed for processing petabyte-scale data at high speed. A system with 16 GPUs performs 160 trillion calculations per GPU per hour, or about 2.5 quadrillion calculations per hour in total [20]. In such an environment, a process that takes 200 hours can be completed in about an hour. For details, refer to our earlier publications in this field [1-12]. A more extensive explanation of our preliminary research can be found in Appendix 5.
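The aggregate throughput quoted above follows directly from the per-GPU rate. The 200-hour example corresponds to a speedup $S$ of 200, where $S$ is defined here as the ratio of baseline run time to GPU run time:

```latex
\begin{aligned}
16 \times 1.6\times10^{14} &= 2.56\times10^{15}\ \text{calculations/hour} \approx 2.5\ \text{quadrillion calculations/hour},\\
S &= \frac{T_{\text{baseline}}}{T_{\text{GPU}}} = \frac{200\ \text{h}}{1\ \text{h}} = 200.
\end{aligned}
```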

Research Objectives

Our objective is to preserve evidence in its most original form while performing a structured investigation that collects, identifies, and validates digital information, so that past events can be reconstructed and threats minimized. We propose to address this problem through “Large Scale Data Visualization,” which uses cloud technology and GPU-based programming to deliver real-time solutions. GPU-based high-performance computing has been shown to produce results significantly faster than traditional CPU-based computing for data-parallel workloads. The outcomes will be presented as visual evidence for further investigation to help resolve criminal cases.

- Task 1 - Retrieving forensic evidence from Log Files - Attackers commonly attempt to remove (destroy) the evidence once they finish executing an attack. Forensic investigations on virtual machines are particularly challenging. We propose a model to formalize forensic analysis of event and trace logs [23, 24] that can give insight into access timestamps, event sequences, and possible attack scenarios. We simulate and synthesize attack scenarios from the collected logs and event sequences. The aim is to replicate the synchronized structure of cyberattacks and gain better insight into how such events can be averted in the future.

- Task 2 - Forensic model using Hadoop Distributed File System (HDFS) - The volume of available data requires careful processing of real-time data streams from various sources. An incoming data stream can contain redundancies that need to be classified and filtered. This classification process enables us to identify the content of suspected phishing streams. Existing state-of-the-art tools lack the capability to process big forensic data sets. We propose a cloud-based architecture for collecting and analyzing big forensic data sets to create an economical and effective model. The model will use a cloud framework designed as Forensics-as-a-Service (FaaS) and will be available to investigators working on big data sets. The input data streams include log file entries (security logs), file system metadata structures, registry information, application logs, and network packets. Metadata helps identify associated artifacts that can be used to investigate and verify fraud, abuse, and many other types of cybercrime [9-14]. The Semantic Web-Based Framework for Metadata Forensic Examination and Analysis performs data acquisition and prevention, data examination, and data analysis. Our digital forensics model incorporates these characteristics. Processing of this data stream and fusion of the resulting information will be automated through the application of AI and machine learning techniques.
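The timeline reconstruction in Task 1 can be illustrated with a small Python sketch. It assumes a hypothetical log format with one `ISO-timestamp host action` entry per line. The names `LogEvent`, `build_timeline`, `find_gaps`, and `matches_sequence`, and the 30-minute gap threshold, are illustrative assumptions and not part of the proposed model. The sketch does three things:

- merges entries into one chronologically ordered timeline;
- flags unusually long silent intervals, which are one possible sign that an attacker deleted entries;
- checks whether a suspected attack sequence appears in order.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass(frozen=True)
class LogEvent:
    timestamp: datetime
    host: str
    action: str

def parse_line(line):
    """Parse one entry in the hypothetical 'ISO-timestamp host action' format."""
    ts, host, action = line.split(maxsplit=2)
    return LogEvent(datetime.fromisoformat(ts), host, action.strip())

def build_timeline(lines):
    """Merge non-empty entries from one or more logs into a time-ordered list."""
    events = (parse_line(line) for line in lines if line.strip())
    return sorted(events, key=lambda e: e.timestamp)

def find_gaps(timeline, max_gap=timedelta(minutes=30)):
    """Return (start, end) pairs of silent intervals longer than max_gap."""
    return [(a.timestamp, b.timestamp)
            for a, b in zip(timeline, timeline[1:])
            if b.timestamp - a.timestamp > max_gap]

def matches_sequence(timeline, pattern):
    """True if the actions in pattern occur in this order (not necessarily adjacent)."""
    actions = iter(e.action for e in timeline)
    # Each `in` test consumes the iterator up to the match, which enforces ordering.
    return all(step in actions for step in pattern)
```

With the made-up entries `login` at 10:00, `privilege_escalation` at 10:05, and `log_cleared` at 11:00, the sketch returns the events in that order. It reports one gap (10:05 to 11:00) and confirms the sequence `["login", "privilege_escalation", "log_cleared"]`.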

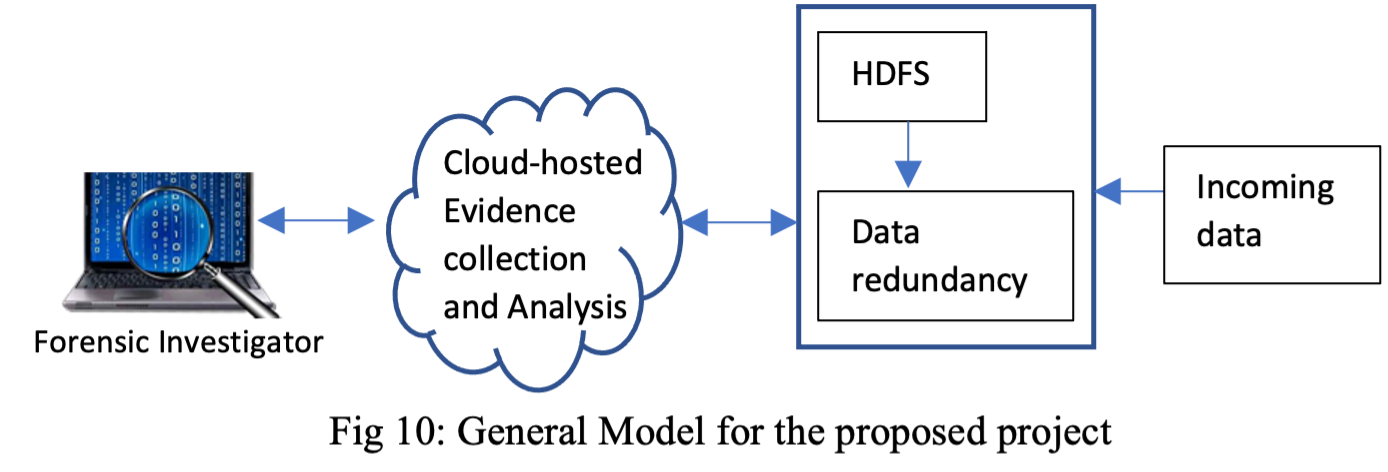
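The redundancy handling described for Task 2 can be sketched as a MapReduce-style job in plain Python. This is a single-process illustration under stated assumptions:

- Records are hypothetical `(source, payload)` string pairs.
- Exact duplicates are detected by SHA-256 digest.
- The functions only mimic the map, shuffle, and reduce phases that Hadoop would distribute across a cluster; the sketch does not use the Hadoop API.

```python
import hashlib
from collections import defaultdict

def map_phase(records):
    """Emit (SHA-256 digest, (source, payload)); identical payloads share a key."""
    for source, payload in records:
        digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()
        yield digest, (source, payload)

def shuffle(pairs):
    """Group values by key, as the framework does between the map and reduce phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Keep one copy of each distinct payload plus every source that reported it."""
    for digest, values in groups.items():
        payload = values[0][1]
        sources = sorted({src for src, _ in values})
        yield digest, payload, sources

def deduplicate(records):
    """Run the three phases in sequence on an in-memory list of records."""
    return list(reduce_phase(shuffle(map_phase(records))))
```

The sketch keeps the list of sources for each unique payload instead of silently dropping duplicates. This preserves provenance, which matters for evidence even when redundant copies are discarded.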

More specifically, in this task we propose a big data forensic model using HDFS and a cloud-based conceptual model for reliable forensic investigation of big data streams. HDFS, the Apache Hadoop file system modeled on the Google File System, is designed to store very large data sets reliably and to stream them at high bandwidth to user applications. The general model is given in Fig 4. Big data forensics is challenging and requires online, real-time results. The proposed Hadoop framework will be used to process large volumes of continuous data in real time. The proposed HDFS model generates a variety of files during forensic data analysis. The model includes redundancy handling, evidence collection, and cloud-based evidence instances [25].

- Task 3 - GPU-based Architecture for Latent Fingerprints - We propose a Python-based Anaconda Accelerate system on a GPU architecture that responds with lower latency and higher throughput than MapReduce [1,16]. We will further enhance this system with deep learning techniques, using packages such as TensorFlow. The goal is to integrate the Bezier ridge descriptors and improve matching of partial latent prints against the reference fingerprints collected in the previous task. The result will be a real-time enhancement of current minutiae-based algorithms that adds ridge-pattern matching, providing a solution deployable in critical battlefield operations.
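The data-parallel core of Task 3, scoring one latent print against many reference prints, can be sketched with NumPy broadcasting. The sketch runs on the CPU and does not use Anaconda Accelerate or TensorFlow. It assumes the descriptors are already aligned and resampled to the same number of points. It uses a simple inverse-mean-distance score as a hypothetical stand-in for Bezier ridge descriptor matching. The names `batch_scores` and `top_matches` and the defaults `k=3` and `threshold=0.8` are illustrative assumptions.

```python
import numpy as np

def batch_scores(latent, references):
    """Score one latent descriptor against every reference in a single array operation.

    latent:     (k, 2) array of sampled ridge points
    references: (m, k, 2) array, one entry per reference print
    Returns an (m,) array of similarities 1 / (1 + mean point distance).
    """
    diffs = references - latent[None, :, :]                 # broadcast to (m, k, 2)
    mean_dist = np.linalg.norm(diffs, axis=2).mean(axis=1)  # (m,)
    return 1.0 / (1.0 + mean_dist)

def top_matches(latent, references, k=3, threshold=0.8):
    """Indices of up to k best-scoring references whose score clears the threshold."""
    scores = batch_scores(latent, references)
    order = np.argsort(scores)[::-1][:k]                     # highest scores first
    return [int(i) for i in order if scores[i] >= threshold]
```

Each reference is scored independently, so the same array expression maps naturally onto a GPU. For example, CuPy provides a largely NumPy-compatible array interface that could host this computation.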

We are currently using Docker containers for our research, with the goal of delivering our tools as software-as-a-service. The codebase developed for our project will be available on GitHub, Bitbucket, or other repositories with fork and push capabilities, with access granted to all collaborators, including students.