Project 4: Drone Forensics with Machine Learning based Fingerprinting and Blockchain

Leads: Dr. Akkaya (FIU)

Co-Leads: Drs. Jose Almirall, S.S. Iyengar and Col. Jerry Miller (FIU), Dr. Mirzanur Rahman (IBM)

Introduction

With the widespread use of smartphones and drones, recording video of our surroundings has become common practice. Drones in particular are also used by law enforcement when responding to calls or pursuing crimes [1,2]. In these applications, video footage provides significant visual information for analyzing and investigating a crime and for resolving disputes about an incident, because its spatio-temporal context reveals detailed information about the case [3,4]. Because video data may be used as evidence, its authenticity is critical for making decisions and reaching conclusions. However, video forgery techniques are now so sophisticated that even a typical user can alter a video scene using off-the-shelf, open-source editing tools supported by machine learning. Beyond manipulation of stored video, wireless channels are another avenue for tampering [5], since videos may be altered while being transmitted. Finally, there needs to be a mechanism to prove that the video indeed came from the claimed drone. We propose mechanisms to address these two problems: the first deals with the integrity of the video data, while the second handles source verification.

Preliminary Work

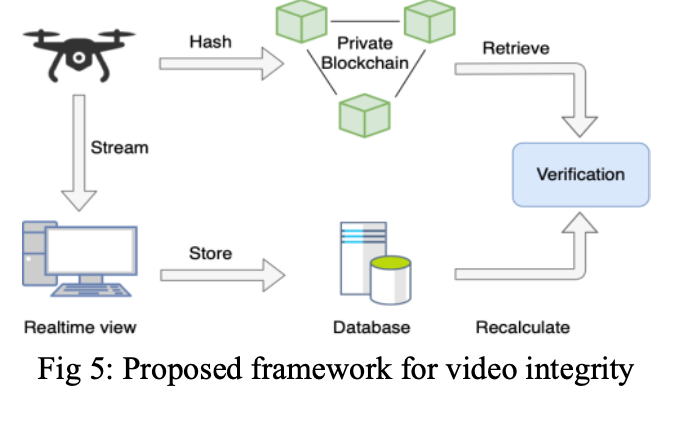

Video Integrity Forensics: Various methods have been proposed to prove the integrity and authenticity of video recordings. For instance, because digital piracy has long been an issue, anti-piracy techniques such as watermarking [6] can be applied by embedding a hidden signature into the video. This signature can later be analyzed to determine whether the video has been tampered with. However, drone cameras are not equipped with this capability, and watermarks are vulnerable to transformation attacks such as scaling and shearing [7], as well as removal, cryptographic, geometric, and protocol attacks [8]. Another method for integrity verification is hashing, where a hash is computed and delivered with the video. This is a simple and cost-efficient technique as long as the hash function used is collision resistant. However, the integrity of the hash itself must also be maintained from the time it leaves the camera until it is stored on a server. To this end, we advocate the use of blockchain in this project as an integrity framework. Our framework, shown in Fig. 5, maintains the integrity of video data by computing its hash and storing it in a permissioned blockchain, while ensuring that the server receives the original video exactly as it left the drone, without loss, and still supporting real-time streaming. The challenge is to match the video received at the server with the one that left the drone. TCP is poorly suited to this kind of streaming because it introduces jitter into the video frames whenever losses occur during transmission. To handle real-time streaming from a drone to a remote server, we therefore propose a UDP-based reliable data transmission scheme that enables low-latency streaming.
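The hash-then-record idea above can be illustrated with a minimal sketch. It assumes SHA-256 as the collision-resistant hash and folds per-chunk hashes into a running digest so that chunk order and count both matter. The `ledger` dictionary and the names `record`, `verify`, and `chain_digest` are hypothetical stand-ins for illustration only; they are not the project's framework and do not model a real permissioned blockchain.

```python
import hashlib

def chain_digest(chunks):
    """Fold the SHA-256 hash of each chunk into a running digest.

    Changing, dropping, or reordering any chunk changes the final value.
    """
    running = bytes(32)  # fixed all-zero starting value (an assumption)
    for chunk in chunks:
        leaf = hashlib.sha256(chunk).digest()
        running = hashlib.sha256(running + leaf).digest()
    return running.hex()

# Hypothetical stand-in for the permissioned ledger: video id -> digest.
ledger = {}

def record(video_id, chunks):
    """Drone side: store the digest of the video as it left the camera."""
    ledger[video_id] = chain_digest(chunks)

def verify(video_id, received_chunks):
    """Server side: recompute the digest and compare with the stored one."""
    return ledger.get(video_id) == chain_digest(received_chunks)

sent = [b"frame-0", b"frame-1", b"frame-2"]
record("flight-001", sent)
print(verify("flight-001", sent))                                   # intact copy
print(verify("flight-001", [b"frame-0", b"frame-X", b"frame-2"]))   # altered copy
```

Because verification compares exact digests, this kind of check only succeeds if the server receives every byte that left the drone, which is why lossless delivery matters for the framework.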

Research Objectives

We propose to further develop video integrity forensics for incorporation into a digital forensics tool for drone video analysis. Specifically, we will offer an application-level reliability mechanism to ensure that the video arrives at the destination without any losses. In addition, we will address the video source proof problem, which ties a video to a specific drone.
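One way an application-level reliability mechanism can work is sketched below. The proposal does not specify the protocol design, so this is an assumption for illustration: packets carry sequence numbers, the receiver reports which numbers are still missing, and the sender retransmits only those. To keep the example self-contained, the lossy channel is simulated in memory rather than with real UDP sockets, and the receiver's feedback is assumed to arrive reliably. The names `lossy_send` and `transfer` are hypothetical.

```python
import random

def lossy_send(packets, loss_rate, rng):
    """Simulated unreliable channel: drop each packet independently."""
    return [p for p in packets if rng.random() >= loss_rate]

def transfer(chunks, loss_rate=0.3, seed=0, max_rounds=100):
    """Deliver all chunks over the lossy channel using retransmission.

    Returns the reassembled chunks and the number of send rounds used.
    """
    rng = random.Random(seed)
    outbox = dict(enumerate(chunks))   # seq -> payload, kept for resends
    received = {}                      # receiver buffer: seq -> payload
    missing = set(outbox)              # sequence numbers not yet delivered
    rounds = 0
    while missing and rounds < max_rounds:
        rounds += 1
        batch = [(seq, outbox[seq]) for seq in sorted(missing)]
        for seq, payload in lossy_send(batch, loss_rate, rng):
            received[seq] = payload
        # Receiver feedback: which sequence numbers are still missing.
        missing = set(outbox) - set(received)
    if missing:
        raise RuntimeError("gave up with %d chunks undelivered" % len(missing))
    # Reassemble in sequence order, independent of arrival order.
    return [received[seq] for seq in range(len(chunks))], rounds

video = [bytes([i]) * 4 for i in range(50)]
delivered, rounds = transfer(video)
print(delivered == video, rounds)
```

A real design would also need timeouts, loss of feedback messages, and a bound on buffering so that retransmissions do not stall live playback.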

- Research Task 1: Video Source Proof - Given a video whose integrity has already been proven using our first approach, the problem is to prove that it came from the camera of the drone used for the recording and was not fabricated elsewhere. In many cases, metadata about the physical camera of interest is unavailable or unknown, which makes it impractical to rely on hardware signatures and template-based identification alone. This motivates feature-based learning on top of image fingerprinting techniques such as Photo Response Non-Uniformity (PRNU) and Noiseprint [9]. PRNU refers to a noise pattern present only in digitally captured images, resulting from the way light-sensitive sensors such as Complementary Metal Oxide Semiconductor (CMOS) and Charge-Coupled Device (CCD) sensors convert photons into electrons. Intuitively, given uniform exposure of a camera sensor to light, we would expect every pixel to output the same response. In practice, small imperfections arise during sensor manufacturing due to sample variation, and additional differences may appear in the sensor’s cell size and substrate material. Hence, PRNU can be measured as the difference between the sensor's actual response and a uniform response. Since these manufacturing imperfections are inherent and irreversible, they leave a unique physical “fingerprint” that can be extracted for use in digital forensics. Noiseprint is another extractable noise pattern similar to PRNU; however, it removes high-level objects and artifacts from the image model, leaving a more robust “fingerprint” that is insensitive to varying temperature, humidity, and light intensity.
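The PRNU description above can be made concrete with a small sketch. It assumes the commonly used multiplicative sensor model, in which an image is roughly `scene * (1 + K) + noise` with `K` the per-pixel PRNU factor; this model is not stated in the proposal. The denoiser here is a crude 3x3 mean filter standing in for the wavelet-based denoisers typically used in practice, and all function names are hypothetical. It is an illustration of the idea, not the project's extraction pipeline.

```python
import numpy as np

def box_denoise(img, k=3):
    """Crude denoiser: k x k mean filter with edge padding (a stand-in)."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.zeros_like(img, dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

def noise_residual(img):
    """Residual W = image minus its denoised version."""
    img = img.astype(np.float64)
    return img - box_denoise(img)

def estimate_fingerprint(images):
    """Estimate K as sum(W_i * I_i) / sum(I_i^2) over images from one camera."""
    num = np.zeros(images[0].shape, dtype=np.float64)
    den = np.zeros(images[0].shape, dtype=np.float64)
    for img in images:
        img = img.astype(np.float64)
        num += noise_residual(img) * img
        den += img * img
    return num / (den + 1e-12)  # small constant avoids division by zero

def ncc(a, b):
    """Normalized cross-correlation between two equally sized arrays."""
    a = a - a.mean()
    b = b - b.mean()
    return float((a * b).sum() / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12))

def match_score(img, fingerprint):
    """Correlate the image's residual with the expected PRNU term K * I."""
    img = img.astype(np.float64)
    return ncc(noise_residual(img), fingerprint * img)
```

In use, a fingerprint would be estimated from several images known to come from one camera, and a probe image would be attributed to that camera when its `match_score` is clearly higher than for other candidate fingerprints. The decision threshold is left open here because it depends on the data.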

Specific Contribution

Unlike prior work, which focuses on differentiating between camera models, camera manufacturers, or both simultaneously in a multi-class classification setting, our approach focuses on a binary classification setting. We use the aforementioned image fingerprinting techniques to distinguish between two camera devices of the same class (i.e., devices that share the same model, the same manufacturer, or both). We propose using deep learning with two neural network models: a simple architecture and a deep architecture. Additionally, two image formats (JPEG and TIFF) will be used to assess the robustness of the models' classification ability on images with lossy and lossless compression. We also plan to explore several other machine learning techniques that may be applicable in our scenario, including a triplet-loss-based Siamese architecture, Decision Trees, Random Forest, and K-Nearest Neighbor. The approach will be tested on cameras from manufacturers such as Canon, Nikon, Samsung, Fujifilm, Panasonic, and Apple to provide a full baseline and to validate its applicability across camera models and manufacturers.
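For readers unfamiliar with the triplet-loss objective mentioned above, the following is a minimal sketch of its standard form. It assumes embeddings have already been produced by some network; the margin value and the function name are illustrative choices, not parameters taken from the proposal.

```python
import numpy as np

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Mean of max(d(a, p) - d(a, n) + margin, 0) with squared Euclidean distance.

    anchor and positive are embeddings of images from the same device;
    negative is an embedding of an image from the other device.
    """
    d_pos = np.sum((anchor - positive) ** 2, axis=-1)
    d_neg = np.sum((anchor - negative) ** 2, axis=-1)
    return float(np.maximum(d_pos - d_neg + margin, 0.0).mean())
```

The loss is zero once same-device pairs are closer than different-device pairs by at least the margin, which is the property that makes it a candidate for separating two devices of the same model.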

Project Impact

This project will have a significant impact on the harmonization of digital and forensic science, strengthening knowledge management while increasing the depth of digital traces and the quality of forensic results used to identify cyber-forensic and forensic events. It will also support identifying records and evidence in digital systems to establish context, provenance, relationships, and meaning. Project activities will result in a reference architecture and specific guidance for deployment in battlefield operations.